Good morning, AI enthusiasts. Anthropic just turned Claude into an operating system. Apps like Slack, Canva, and Asana now run directly inside the chat interface, letting users complete actual work without ever leaving the conversation.

The shift mirrors Tencent's WeChat playbook in China, embedding services into the platform rather than linking out. With enterprises moving from chat to agents, has Anthropic just built the coordination layer that defines the next decade of workplace software?

In today's recap:

Anthropic launches interactive apps inside Claude chat

Microsoft's Maia 200 chip challenges Nvidia's dominance

Run Claude Code locally with Ollama

Alibaba's Qwen3 beats GPT-5.2 on reasoning tests

4 new AI tools, prompts, and more

ANTHROPIC

Anthropic launches interactive Claude apps

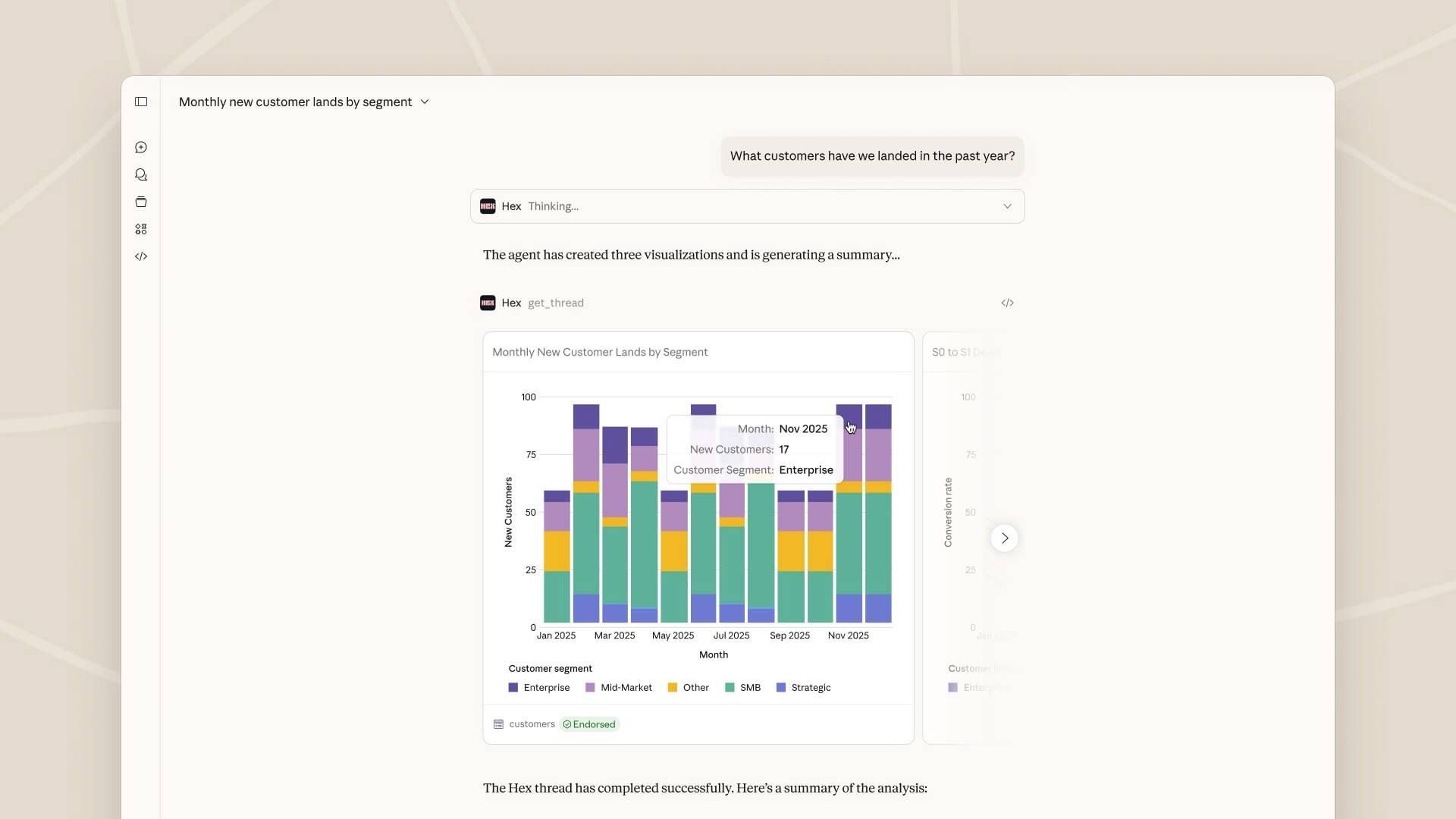

Recaply: Anthropic just introduced MCP Apps, the first official extension to the Model Context Protocol, which lets users interact with tools like Slack, Canva, and Figma directly inside Claude chat instead of reading text summaries.

Key details:

Tools now open as interactive apps inside Claude, allowing users to format Slack messages, customize Canva decks in real time, and manage Asana projects without leaving the conversation.

MCP Apps work across Claude, ChatGPT, Goose, and Visual Studio Code, with Salesforce integrations coming soon, marking the first time a single MCP tool works across multiple AI platforms without client-specific code.

Apps run in sandboxed iframes with restricted permissions and auditable messages, with hosts able to require explicit approval for any tool calls initiated by the UI.

The feature is available now for Pro, Max, Team, and Enterprise subscribers at claude.ai/directory, with Cowork integration launching soon to let the agent access cloud files and work projects.

Why it matters: Claude is moving from chat assistant to operating system. MCP Apps shifts the interface from answering questions to becoming a workspace where users complete actual tasks inside the conversation. This follows the path Tencent's WeChat took in China, embedding services directly into the platform rather than linking out. The timing matters because enterprises are transitioning from chat to agents, and the coordination layer between tools has been the biggest gap.
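For the technically curious: the approval rule in the key details above (hosts can require explicit sign-off for tool calls that the app UI initiates, and messages are auditable) can be pictured with a toy host. This is only a sketch under our own assumptions, not the MCP Apps API. Every name here (`ToolCallRequest`, `Host`, `handle_ui_request`) is hypothetical and exists purely for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class ToolCallRequest:
    """A tool call requested by an embedded app UI (hypothetical shape)."""
    app_id: str
    tool: str
    arguments: dict


@dataclass
class Host:
    """Toy host: gates UI-initiated tool calls and records every request."""
    approve: Callable[[ToolCallRequest], bool]   # e.g. a user confirmation prompt
    tools: Dict[str, Callable[..., object]]      # tools the host is willing to run
    audit_log: List[dict] = field(default_factory=list)

    def handle_ui_request(self, request: ToolCallRequest) -> Optional[object]:
        # Unknown tools are rejected before the approver is even asked.
        known = request.tool in self.tools
        approved = known and self.approve(request)
        # Log the request whether or not it runs, so it can be audited later.
        self.audit_log.append(
            {"app": request.app_id, "tool": request.tool, "approved": approved}
        )
        if not approved:
            return None
        return self.tools[request.tool](**request.arguments)
```

The design choice worth noticing is that the log entry is written before the tool runs and regardless of the decision, so a denied request still leaves a trace.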

PRESENTED BY HUBSPOT

Want to get the most out of ChatGPT?

ChatGPT is a superpower if you know how to use it correctly.

Discover how HubSpot's guide to AI can elevate both your productivity and creativity to get more things done.

Learn to automate tasks, enhance decision-making, and foster innovation with the power of AI.

MICROSOFT

Microsoft unveils Maia 200 inference chip

Recaply: Microsoft just rolled out Maia 200, its second-generation AI chip built on TSMC's 3nm process with 140B transistors, delivering 10 petaFLOPS in FP4 and 30% better performance per dollar than current fleet hardware.

Key details:

Maia 200 delivers 10 petaFLOPS in 4-bit precision and 5 petaFLOPS in 8-bit precision within a 750W envelope, with 216GB HBM3e memory at 7 TB/s and 272MB on-chip SRAM.

The chip delivers three times the FP4 performance of Amazon's third-generation Trainium and FP8 performance above Google's seventh-generation TPU, with each accelerator exposing 2.8 TB/s of dedicated scale-up bandwidth.

Microsoft deployed Maia 200 in its Iowa datacenter this week, with Arizona launching next, and the chip went from first silicon to production deployment in less than half the time of comparable AI infrastructure programs.

The chip will serve GPT-5.2 models from OpenAI through Microsoft Foundry and Microsoft 365 Copilot, with an SDK preview available now at aka.ms/Maia200SDK that includes PyTorch support and a Triton compiler.

Why it matters: Microsoft isn't just reducing dependence on Nvidia. It's building a complete AI stack from silicon to software, with Triton taking on CUDA's role as the programming interface. The 30% performance-per-dollar advantage matters because inference is where AI companies burn cash at scale, and custom chips give hyperscalers pricing power that Nvidia customers don't have. Google proved this model with TPUs. Amazon is building it with Trainium.
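To put the headline numbers in perspective, here is a quick back-of-the-envelope calculation using only the figures quoted above. It assumes decimal units (1 petaFLOPS = 1,000 teraFLOPS, 1 TB = 1,000 GB) and treats the quoted peak numbers as sustained, which real workloads will not reach. The function names are ours.

```python
# Figures quoted in the key details above.
FP4_PFLOPS = 10.0      # peak 4-bit throughput
FP8_PFLOPS = 5.0       # peak 8-bit throughput
POWER_W = 750.0        # power envelope
HBM_GB = 216.0         # HBM3e capacity
HBM_TBPS = 7.0         # HBM3e bandwidth


def tflops_per_watt(pflops: float, watts: float) -> float:
    """Peak teraFLOPS per watt."""
    return pflops * 1000.0 / watts


def full_memory_sweep_ms(capacity_gb: float, bandwidth_tbps: float) -> float:
    """Milliseconds needed to read the whole memory once at peak bandwidth."""
    return capacity_gb / (bandwidth_tbps * 1000.0) * 1000.0


def cost_reduction(perf_per_dollar_gain: float) -> float:
    """Fractional cost saving for the same work, given a perf-per-dollar gain."""
    return 1.0 - 1.0 / (1.0 + perf_per_dollar_gain)


if __name__ == "__main__":
    print(f"FP4: {tflops_per_watt(FP4_PFLOPS, POWER_W):.2f} TFLOPS/W")
    print(f"FP8: {tflops_per_watt(FP8_PFLOPS, POWER_W):.2f} TFLOPS/W")
    print(f"Full HBM sweep: {full_memory_sweep_ms(HBM_GB, HBM_TBPS):.1f} ms")
    print(f"Cost saving at +30% perf/$: {cost_reduction(0.30):.1%}")
```

One takeaway: 30% better performance per dollar works out to roughly 23% lower cost for the same amount of work, not 30%.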

TUTORIAL

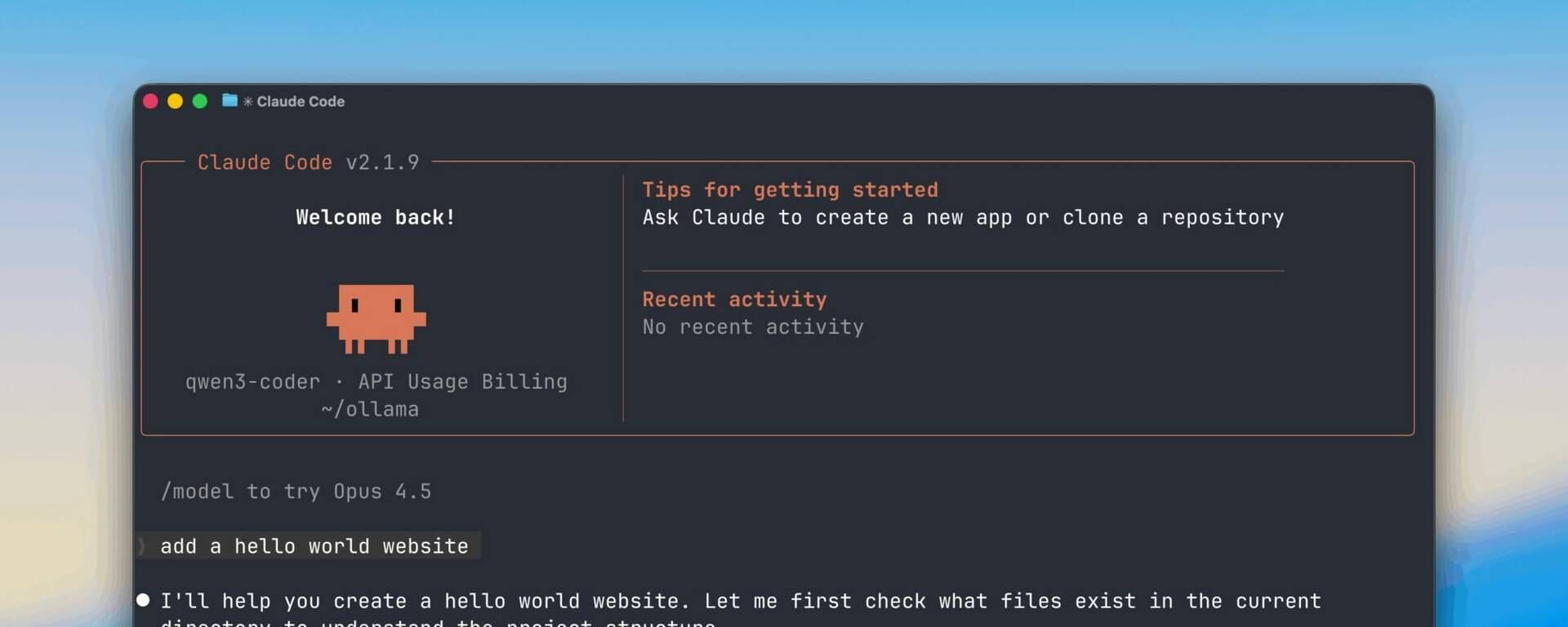

Run Claude Code locally with Ollama (100% free and private)

Recaply: In this tutorial, you will learn how to run Claude Code on your machine using Ollama and local open-source models. This setup gives you complete privacy with no API costs or data tracking.

Step-by-step:

Download Ollama from ollama.com and install it, then open your terminal and run "ollama run qwen2.5-coder:7b" to download a coding model (downloads automatically on first use)

Install Claude Code by running the install command: on Mac or Linux type "curl -fsSL https://claude.ai/install.sh | bash", on Windows PowerShell type "irm https://claude.ai/install.ps1 | iex"

Point Claude Code to Ollama by setting these environment variables in your terminal: "export ANTHROPIC_BASE_URL=http://localhost:11434" then "export ANTHROPIC_AUTH_TOKEN=ollama"

Navigate to any project folder and launch Claude with "claude --model qwen2.5-coder:7b", which starts the local AI coding assistant in your terminal

Test it with a prompt like "Add a hello world website" and watch Claude read files, write code, and run commands entirely on your machine with no cloud processing

Pro tip: High-performance systems can use qwen2.5-coder:32b, which scores 73.7 on Aider benchmarks and matches GPT-4o. Lower-RAM machines work well with qwen2.5-coder:3b or gemma:2b while staying fully private.
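If you would rather not retype the exports in every new terminal, the steps above can be wrapped in a small Python launcher. This is a minimal sketch that only reuses the two environment variables and the `claude --model` command from the tutorial. The helper names (`build_env`, `build_command`, `launch`) are our own, and it assumes Ollama is already running and the model has been pulled.

```python
import os
import shutil
import subprocess

OLLAMA_BASE_URL = "http://localhost:11434"   # local Ollama endpoint from the tutorial
DEFAULT_MODEL = "qwen2.5-coder:7b"


def build_env(base_env=None):
    """Copy the environment and add the two variables from the tutorial."""
    env = dict(os.environ if base_env is None else base_env)
    env["ANTHROPIC_BASE_URL"] = OLLAMA_BASE_URL
    env["ANTHROPIC_AUTH_TOKEN"] = "ollama"
    return env


def build_command(model=DEFAULT_MODEL):
    """Command line used in the tutorial to start Claude Code with a local model."""
    return ["claude", "--model", model]


def launch(model=DEFAULT_MODEL, project_dir="."):
    """Start Claude Code in project_dir and return its exit code."""
    if shutil.which("claude") is None:
        raise FileNotFoundError("claude CLI not found on PATH; install it first")
    completed = subprocess.run(
        build_command(model), env=build_env(), cwd=project_dir
    )
    return completed.returncode
```

Calling `launch("qwen2.5-coder:3b")` from a project folder would start the smaller model from the pro tip instead of the default.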

ALIBABA

Qwen3-Max-Thinking beats GPT-5.2 on reasoning

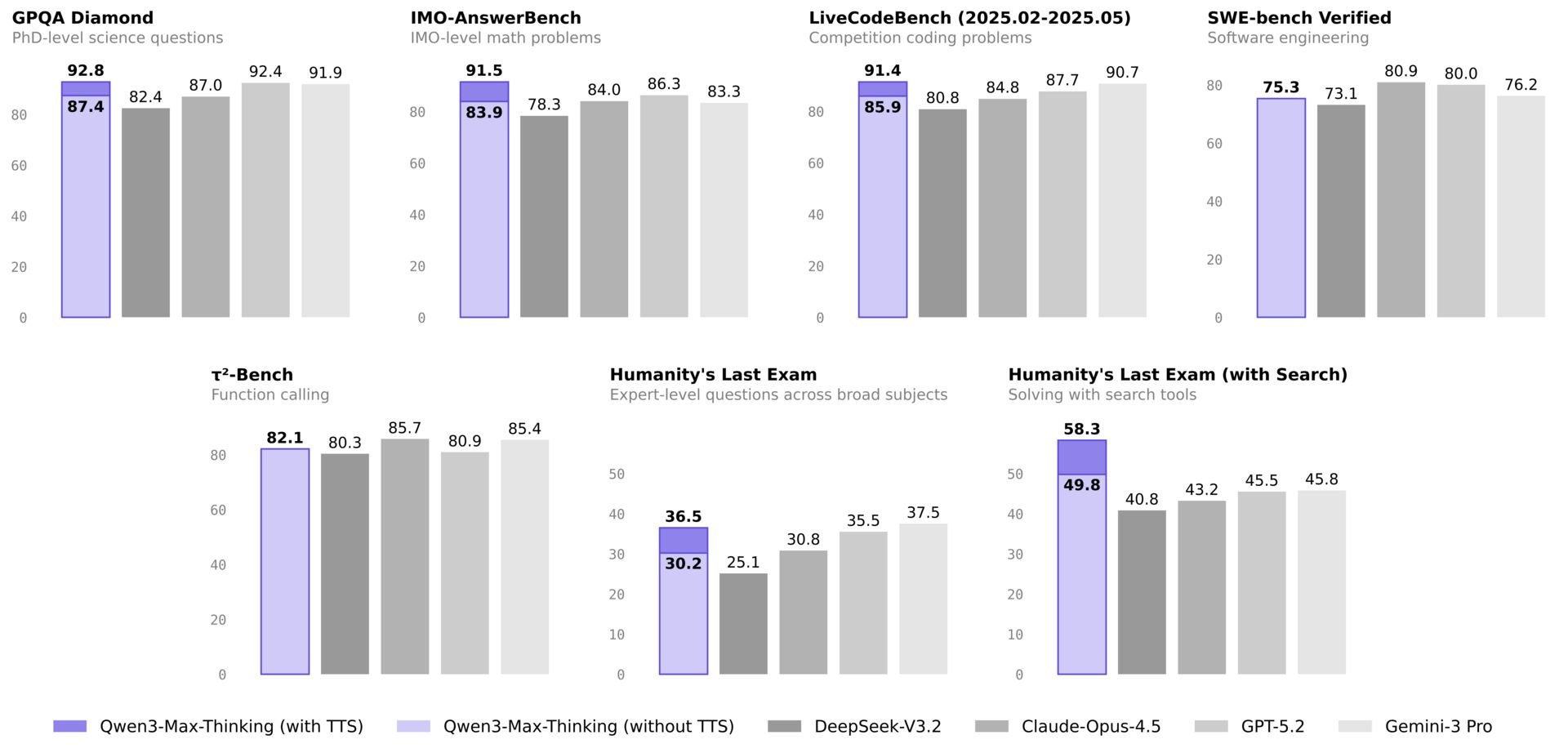

Recaply: Alibaba just launched Qwen3-Max-Thinking, its flagship reasoning model that scored 58.3 on HLE tool-enabled reasoning tests, outperforming GPT-5.2-Thinking at 45.5 and Gemini 3 Pro at 45.8.

Key details:

Qwen3-Max-Thinking uses test-time scaling that extracts experience from prior reasoning to iteratively self-improve within the same context, avoiding computational redundancy from repeatedly deriving known conclusions.

The model achieved 98.0 on HMMT Feb 25 math tests compared to GPT-5.2's 99.4 and Gemini 3 Pro's 97.5, and scored 85.9 on LiveCodeBench v6 against GPT-5.2's 87.7 and Gemini 3 Pro's 90.7.

Qwen's open-source family surpassed 200,000 derivative models on Hugging Face with over 1 billion cumulative downloads, making it the first open-source family to hit this milestone and surpassing Meta's Llama series.

The model is available now at chat.qwen.ai with adaptive tool use for search and code interpreter, and through Alibaba Cloud API as qwen3-max-2026-01-23 with OpenAI-compatible endpoints.

Why it matters: China's AI just closed the gap with Silicon Valley's flagship models. Qwen3-Max-Thinking's 58.3 HLE score versus GPT-5.2's 45.5 isn't a marginal improvement; it's generational leadership on tool-enabled reasoning, even though the model still trails slightly on the math and coding benchmarks above. The open-source dominance matters too: MIT research shows China's AI adoption share hit 17.1%, surpassing America's 15.8% for the first time.
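For readers who want the benchmark picture at a glance, the scores quoted above can be tabulated in a few lines of Python. This is just a sketch over the numbers in this issue. We assume the "Gemini 3" LiveCodeBench figure refers to Gemini 3 Pro, and we file the GPT-5.2-Thinking HLE score under "GPT-5.2" so the rows line up.

```python
# Scores as quoted in this issue; higher is better on all three benchmarks.
SCORES = {
    "HLE (with tools)": {
        "Qwen3-Max-Thinking": 58.3, "GPT-5.2": 45.5, "Gemini 3 Pro": 45.8,
    },
    "HMMT Feb 25": {
        "Qwen3-Max-Thinking": 98.0, "GPT-5.2": 99.4, "Gemini 3 Pro": 97.5,
    },
    "LiveCodeBench v6": {
        "Qwen3-Max-Thinking": 85.9, "GPT-5.2": 87.7, "Gemini 3 Pro": 90.7,
    },
}


def leader(benchmark: str) -> str:
    """Model with the highest quoted score on a benchmark."""
    results = SCORES[benchmark]
    return max(results, key=results.get)


def margin_vs(benchmark: str, model: str = "Qwen3-Max-Thinking",
              rival: str = "GPT-5.2") -> float:
    """Point difference between model and rival (positive means model leads)."""
    results = SCORES[benchmark]
    return round(results[model] - results[rival], 1)


if __name__ == "__main__":
    for name in SCORES:
        print(f"{name}: leader = {leader(name)}, "
              f"Qwen vs GPT-5.2 = {margin_vs(name):+.1f} points")
```

The table makes the split clear: a 12.8-point lead on tool-enabled HLE, but deficits of 1.4 and 1.8 points on HMMT and LiveCodeBench.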

NEWS

What Matters in AI Right Now?

Anthropic rolled out Claude in Excel to Pro users, letting them analyze data, generate formulas, and automate spreadsheet tasks without switching tabs or leaving Excel.

Anthropic CEO Dario Amodei published an essay on the risks posed by powerful AI, focusing on how to navigate the adolescence of transformative technology.

Google started rolling out Personal Intelligence in AI Mode for US subscribers, connecting Search to Gmail and Google Photos to provide personalized recommendations based on user interests and preferences.

Webhound launched Reports, a new deep research product that ranked #1 on DeepResearch Bench by optimizing for depth over speed with long-running agents.

Synthesia raised $200M in funding at a $4B valuation, with the round allowing employees to cash in on secondary shares in the AI video generation company.

Nvidia launched the Earth-2 family of open models and tools, marking the world's first fully open AI weather forecasting models for climate research.

Moonshot AI launched Kimi K2.5, an open-source visual agentic model scoring 50.2% on HLE and 74.9% on BrowseComp, with Agent Swarm beta supporting up to 100 parallel sub-agents and 1,500 tool calls.

TOOLS

Trending AI Tools

⚙️ MCP Apps - First official MCP extension letting tools return interactive UIs like dashboards and forms directly in conversation

🤖 Qwen3-Max-Thinking - Alibaba's flagship reasoning model with adaptive search and code interpreter, matching GPT-5.2-Thinking on key benchmarks

🤖 Kimi K2.5 - Open-source visual agentic model with Agent Swarm supporting up to 100 parallel sub-agents and 1,500 tool calls

💬 Clawdbot - Personal AI assistant running 24/7 on Discord and Telegram with persistent memory and multi-step automation

PROMPTS

Generate Viral Content Hooks

#CONTEXT:

Adopt the role of viral hook architect. The user needs hooks that break through the noise by targeting low-probability, contrarian angles that mainstream content creators miss. Traditional hook formulas have been exhausted: everyone's using the same templates, creating a sea of sameness. The user requires hooks sampled from the tails of probability distributions that feel unexpected, insight-driven, and challenge conventional narratives. Standard AI responses tend toward safe, mainstream takes that blend into the background.

PS: This is not the full prompt. Click the button below to access the complete prompt.

Have a favorite prompt? Tell us about it or rate today’s prompt, click here.

EVENTS

Claude Code Meetup Denver: Feb 17, 2026 • Boulder, Colorado

MCP Connect Day: Feb 05, 2026 • Paris

Paris AI Hackathon: Feb 07-08, 2026 • Paris

Voice Agent Hackathon: Feb 07-08, 2026 • San Francisco, CA

v0 Studio: Jan 29, 2026 • San Francisco, CA

Firecrawl Meetup @ Spin: Jan 29, 2026 • San Francisco, CA

Gemini 3 SuperHack: Jan 31, 2026 • San Francisco, CA

Hack the Stackathon: Jan 31, 2026 • San Francisco, CA

Raycast Stockholm Hackathon: Feb 06, 2026 • Stockholm, Sweden

Build India: Feb 15, 2026 • Bengaluru, India

🧡 Enjoyed this issue?

🤝 Recommend our newsletter or leave feedback.

How'd you like today's newsletter?

Cheers, Jason